- #Edit mode spark for mac install#

- #Edit mode spark for mac update#

- #Edit mode spark for mac code#

- #Edit mode spark for mac professional#

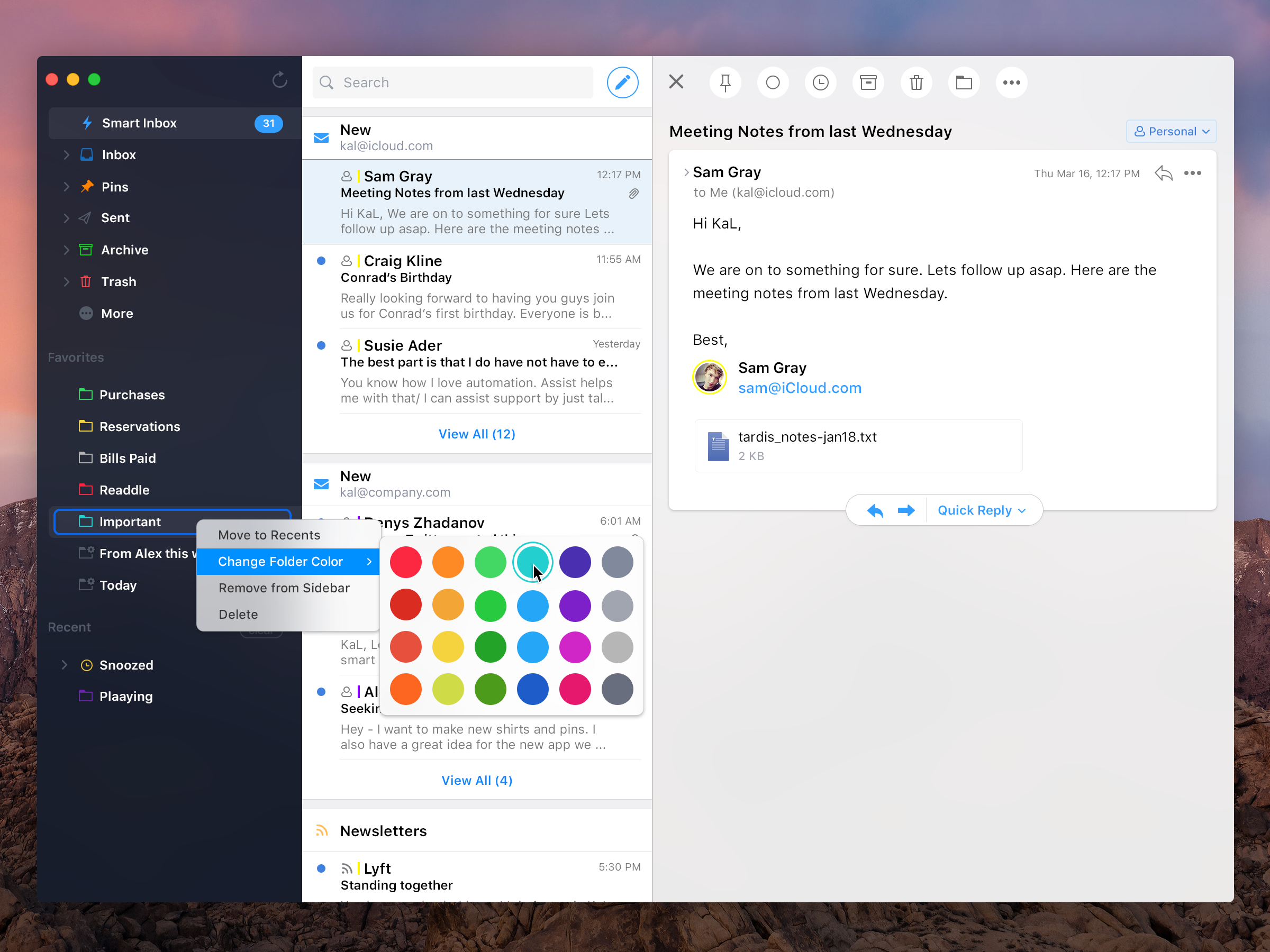

Just search the way you think and let Spark do the rest. Powerful, natural language search makes it easy to find that email you're looking for. Snoozing works across all your Apple devices. Snooze an email and get back to it when the time is right. It works even if your device is turned off. Schedule emails to be sent when your recipient is most likely to read them.


Create email together

For the first time ever, collaborate with your teammates using a real-time editor to compose professional emails. Ask questions, get answers, and keep everyone in the loop. Invite teammates to discuss specific emails and threads. All new emails are smartly categorized into Personal, Notifications and Newsletters.

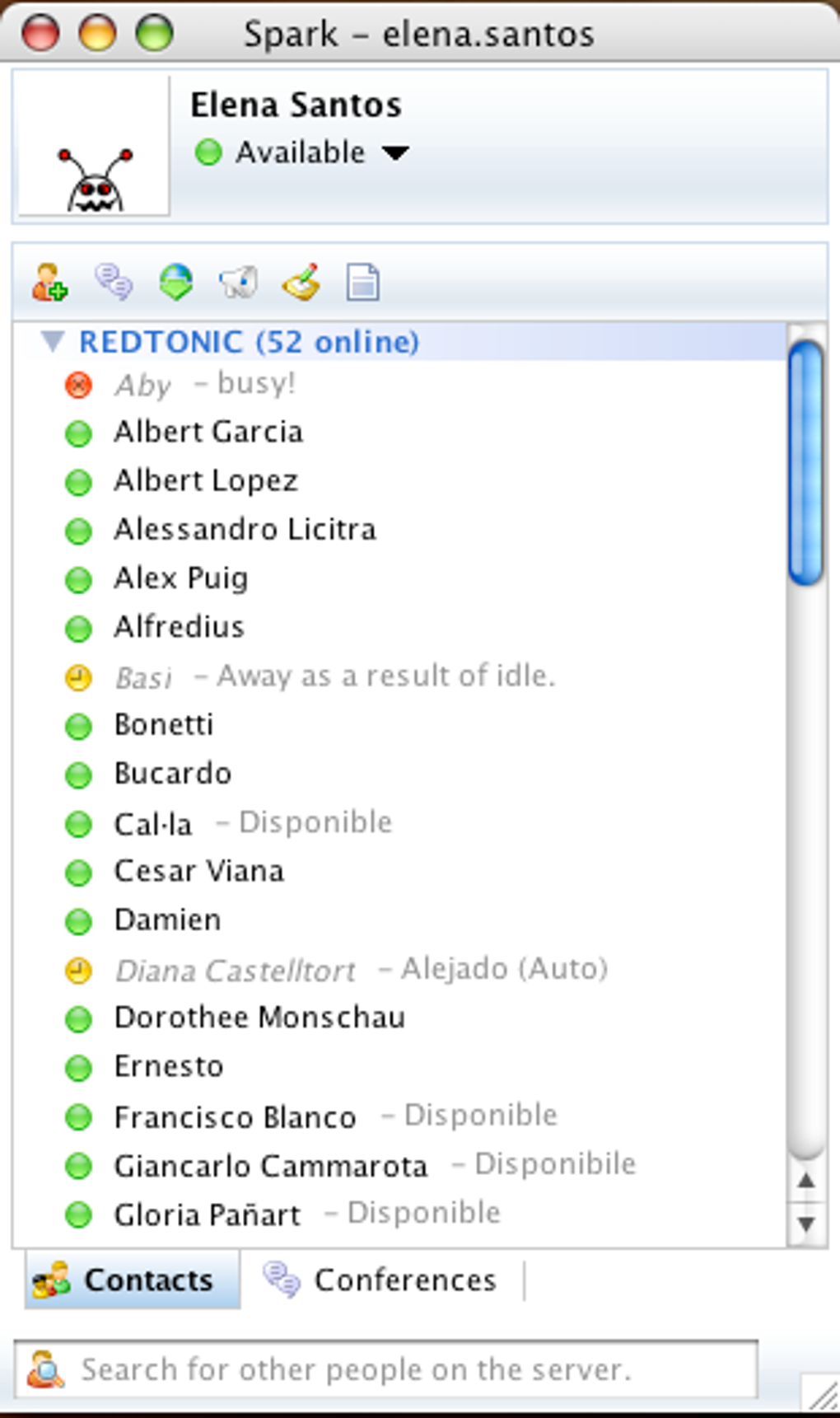

Smart Inbox lets you quickly see what's important in your inbox and clean up the rest. Modern design, fast, intuitive, collaborative, seeing what's important, automation and a truly personal experience that you love: this is what Spark stands for. You will love your email again!

Beautiful and Intelligent Email App

Spark is the personal email client and a revolutionary email for teams.

After reading this, you will be able to execute python files and jupyter notebooks that execute Apache Spark code in your local environment. This tutorial applies to OS X and Linux systems. We assume you already have knowledge of python and a console environment.

We will download the latest version available at the time of writing, 3.0.1, from the official website. Remember to click on the link of step 3 instead of the green button. Download it and extract it on your computer. The path I'll be using for this tutorial is /Users/myuser/bigdata/spark. This folder will contain all the files.

(Image: Spark folder content)

Then we will update our environment variables so we can execute spark programs and our python environments will be able to locate the spark libraries. Open the .bashrc file, located in the home of your user, with $ nano ~/.bashrc and add the following lines:

export SPARK_HOME="/Users/myuser/bigdata/spark"
export PYTHONPATH="$PYTHONPATH:$SPARK_HOME/python:$SPARK_HOME/python/lib"

Save the file and load the changes by executing $ source ~/.bashrc. If this worked, you will be able to open a spark shell:

$ spark-shell
20/12/08 12:03:29 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
20/12/08 12:03:37 WARN Utils: Service 'SparkUI' could not bind on port 4040.
Spark context available as 'sc' (master = local, app id = local-1607425417256).
Using Scala version 2.12.10 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_101)
Type in expressions to have them evaluated.

One of the good things about VS Code as an IDE is that it allows us to run Jupyter notebooks within itself. Follow the Set-up instructions and then install python and the VSCode Python extension. Then, open a new terminal and install the pyspark package via pip: $ pip install pyspark. Note: depending on your installation, the command changes to pip3.

Run your pyspark code

Create a new file or notebook in VS Code and you should be able to execute and get some results using the Pi example provided by the library itself.

If you are on a distribution that installs python3 by default (e.g. Ubuntu 20.04), running pyspark will most likely fail with an error message like env: 'python': No such file or directory. The first option to fix it is to add the following content to your .bashrc file:

export PATH=$PATH:/home/marti/bin
export SPARK_HOME=/your/spark/installation/location/here/spark
export PYTHONPATH=$PYTHONPATH:$SPARK_HOME/python:$SPARK_HOME/python/lib

Remember to always reload the configuration via source. In this case, the solution worked if I executed pyspark from the command line but not from VSCode's notebook.
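The Pi example referred to in this tutorial ships with Spark. What follows is not that script but a minimal sketch in the same spirit, assuming pyspark was installed via pip as described. The function names (inside_unit_circle, pi_from_hits, estimate_pi_with_spark), the application name "PiSketch" and the local[*] master setting are illustrative choices, not taken from the original tutorial.

```python
import random


def inside_unit_circle(_):
    """Draw one random point in the square [-1, 1] x [-1, 1].

    Returns True when the point falls inside the unit circle.
    The argument is ignored; it is only there so the function
    can be passed to RDD.filter.
    """
    x = random.uniform(-1.0, 1.0)
    y = random.uniform(-1.0, 1.0)
    return x * x + y * y <= 1.0


def pi_from_hits(hits, samples):
    """Turn a hit count into a Pi estimate (circle area / square area = pi / 4)."""
    return 4.0 * hits / samples


def estimate_pi_with_spark(samples=100_000, partitions=2):
    """Estimate Pi with a local Spark session (hypothetical helper).

    pyspark is imported lazily so the two helpers above can be
    used and tested even where pyspark is not installed.
    """
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder
        .master("local[*]")    # assumption: run locally on all cores
        .appName("PiSketch")   # assumption: illustrative application name
        .getOrCreate()
    )
    try:
        hits = (
            spark.sparkContext
            .parallelize(range(samples), partitions)
            .filter(inside_unit_circle)
            .count()
        )
    finally:
        spark.stop()
    return pi_from_hits(hits, samples)
```

In a VS Code notebook cell or a python file, calling print(estimate_pi_with_spark()) should print a value close to 3.14 that varies slightly between runs. If the call fails with the 'python' error described in this tutorial, the interpreter configuration is the first thing to check.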
